How to detect ChatGPT plagiarism and why it's so difficult

Chatbots are a hot topic right now, and ChatGPT is the most popular of them. Academics, educators, editors, and others are facing a growing wave of AI-generated plagiarism, and your existing plagiarism-detection tools may not be able to tell what is real from what is fake.

- A plethora of detection options

- Testing them

- Closing

In this article, I talk a bit about this nightmarish side of AI chatbots, check out a few online plagiarism-detection tools, and explore how dire the situation has become.

A plethora of detection options

The November 2022 release of ChatGPT by the startup OpenAI essentially thrust chatbot prowess into the spotlight. With it, anyone, whether an average Joe or a professional, can produce smart, intelligible essays or articles and even solve text-based math problems. To an inexperienced or unaware reader, the AI-created content can easily pass for legitimate writing. That's why students love it and teachers hate it.

A big challenge with AI writing tools is their double-edged ability to use natural language and grammar to build unique, almost individualized content, even when the content itself was drawn from a database. That means the race to beat AI-based cheating is on. Here are a few options I found that are currently available for free.

GPT-2 Output Detector: OpenAI, the company behind ChatGPT, built the Output Detector to show that chatbot text can be detected. Anyone can use it: you simply enter text, and the tool immediately gives an assessment of how likely it is that the text was written by a human.

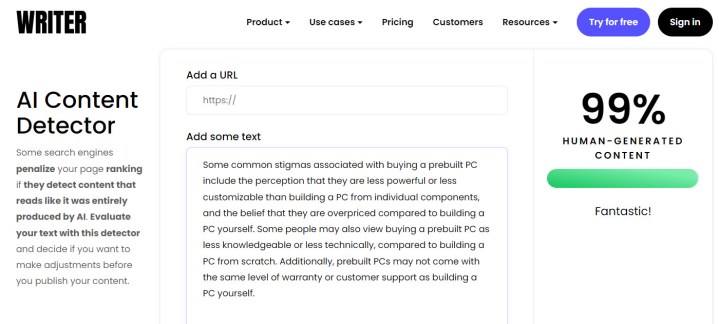

Two other tools with clean UIs are Writer AI Content Detector and Content at Scale. You can add a URL to scan (Writer only) or paste in text manually. The results give you a percentage score indicating how likely it is that the content was written by a human.

GPTZero, a beta tool created by Edward Tian of Princeton University and hosted on Streamlit, is a homegrown option. What sets it apart from the rest is how its "algiarism" (AI-assisted plagiarism) model presents its results. GPTZero splits its metrics into perplexity and burstiness. Perplexity measures the randomness within a sentence, while burstiness measures the overall randomness across all the sentences of a text. The tool assigns a number to each metric: the lower the number, the more likely it is that the text was created by a bot.
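To make those two metrics a bit more concrete, here is a minimal Python sketch. GPTZero doesn't publish its exact formulas, so this is not its implementation: it assumes the textbook definition of perplexity (the exponential of the average negative log-probability per token) and an assumed definition of burstiness as the spread of per-sentence perplexities. The token probabilities are made up for illustration; a real detector would get them by scoring the text with a language model.

```python
import math
from statistics import pstdev


def perplexity(token_probs):
    """Perplexity of one sentence, given the probability a language model
    assigned to each of its tokens: exp of the mean negative log-probability.
    Predictable text (high probabilities) gives a low value."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    mean_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_nll)


def burstiness(sentences):
    """Assumed definition: population standard deviation of the per-sentence
    perplexities. Uniformly predictable sentences give a value near zero."""
    return pstdev([perplexity(s) for s in sentences])


# Hypothetical per-token probabilities, one inner list per sentence.
bot_like = [[0.5, 0.5, 0.5], [0.5, 0.5]]
human_like = [[0.5, 0.5], [0.1, 0.1]]

for name, text in (("bot-like", bot_like), ("human-like", human_like)):
    print(name, [round(perplexity(s), 2) for s in text], round(burstiness(text), 2))
```

Here the "bot-like" text has two equally predictable sentences (perplexity 2.0 each), so its burstiness is 0.0, while the "human-like" text mixes a predictable sentence (2.0) with a surprising one (10.0) and gets a burstiness of 4.0. Low numbers on both metrics point toward a bot, which matches how GPTZero reports its scores.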

Just for fun, I included the Giant Language Model Test Room (GLTR), developed by researchers from the MIT-IBM Watson AI Lab and the Harvard Natural Language Processing Group. Unlike GPTZero, it doesn't present its final results as a clear "human" or "bot" verdict. GLTR essentially uses bots to identify text written or edited by bots, because bots are less likely than humans to pick unpredictable word sequences. The results are shown as a color-coded histogram that ranks AI-generated text against human-written text: the more unpredictable the text, the more likely it was written by a human.
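Here is a rough Python sketch of the idea behind that histogram. It assumes GLTR's published color scheme, where each token is colored by its rank among the language model's predictions: top 10 is green, top 100 yellow, top 1,000 red, and anything less likely purple. The ranks below are hypothetical; GLTR itself gets them by running a model such as GPT-2 over the text, which this sketch skips. The `unpredictable_share` helper is my own illustrative addition, not a GLTR metric.

```python
from collections import Counter

# Assumed GLTR-style buckets: (highest rank included, color).
BUCKETS = ((10, "green"), (100, "yellow"), (1000, "red"))
ORDER = ("green", "yellow", "red", "purple")


def color_for(rank):
    """Map a token's 1-based rank among the model's predictions to a color."""
    for limit, color in BUCKETS:
        if rank <= limit:
            return color
    return "purple"


def histogram(ranks):
    """Count how many tokens fall into each color bucket."""
    counts = Counter(color_for(r) for r in ranks)
    return {color: counts.get(color, 0) for color in ORDER}


def unpredictable_share(ranks):
    """Hypothetical helper: fraction of tokens outside the model's top 10."""
    return sum(1 for r in ranks if r > 10) / len(ranks)


# Hypothetical ranks for an 8-token snippet.
ranks = [1, 3, 2, 15, 1, 250, 4, 5000]
print(histogram(ranks))
print(unpredictable_share(ranks))
```

For these ranks, five tokens land in green and one each in yellow, red, and purple, so 3 of 8 tokens (0.375) fall outside the model's top picks. A text dominated by green tokens is the kind GLTR suggests came from a bot.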

Testing

All these options might make you think we're in a good place when it comes to AI detection. To check how effective each tool really is, though, I wanted to try them myself. I wrote a few sample paragraphs answering questions that I also put to ChatGPT.

My first question was a simple one: Why is buying a prebuilt PC considered a bad idea? Here's how my answer and ChatGPT's answer fared.

| Detector | My real writing | ChatGPT |
|---|---|---|
| GPT-2 Output Detector | 1.18% fake | 36.57% fake |
| Writer AI | 100% human | 99% human |
| Content at Scale | 99% human | 73% human |
| GPTZero | 80 perplexity | |

ChatGPT fooled most of these detectors with its answer. For a start, it scored 99% human in the Writer AI Content Detector app and was flagged as only 36% fake by the GPT-2-based detector. GLTR was the worst offender, claiming that my words were just as likely to have been written by a human as ChatGPT's.

I decided to give the detectors one more chance, though, and this time the results were much better. I asked ChatGPT to summarize the Swiss Federal Institute of Technology's research on preventing fogging with gold particles. The detection apps did a much better job of recognizing my answer as human and catching ChatGPT's.

The top three detectors really showed their strength on this response. GLTR couldn't recognize my writing as human, but it did manage to catch ChatGPT.

Closing

The results of each query show that online plagiarism detectors aren't perfect. They can flag more complex writing (such as the response to the second prompt), but simpler answers are harder for them to catch. Even then, they aren't foolproof: detection tools can sometimes misclassify human-written essays or articles as ChatGPT-generated. That's a problem for editors and teachers who rely on them to catch cheaters. Developers are constantly working to improve accuracy and reduce false positives, but they are also bracing for successors such as GPT-4, which is expected to be more capable than GPT-3.5, the model series ChatGPT was fine-tuned from. For now, educators and editors will have to combine judiciousness with a dash of human intuition to identify AI-generated content. Chatbot users tempted to use tools such as ChatGPT or Notion to pass off their "work" as legitimate shouldn't do it. Any way you look at it, repurposing content drawn from a bot's database is plagiarism.